Fluorescence microscopy allows researchers to study specific structures in complex biological samples. However, the image created with fluorescent probes suffers from blurring and background noise. The latest work from NIBIB researchers and their collaborators presents several novel image restoration strategies that create sharp images with significantly reduced processing time and computing power.¹

The cornerstone of modern image processing is the use of artificial intelligence, particularly neural networks that use deep learning to remove blur and background noise from an image. The basic strategy is to teach the deep learning network to predict what a blurry and noisy image would look like without the blur and noise. The network must be trained to do this with large data sets of pairs of sharp and blurred versions of the same image. A major barrier to using neural networks is the time and expense required to create large training data sets.

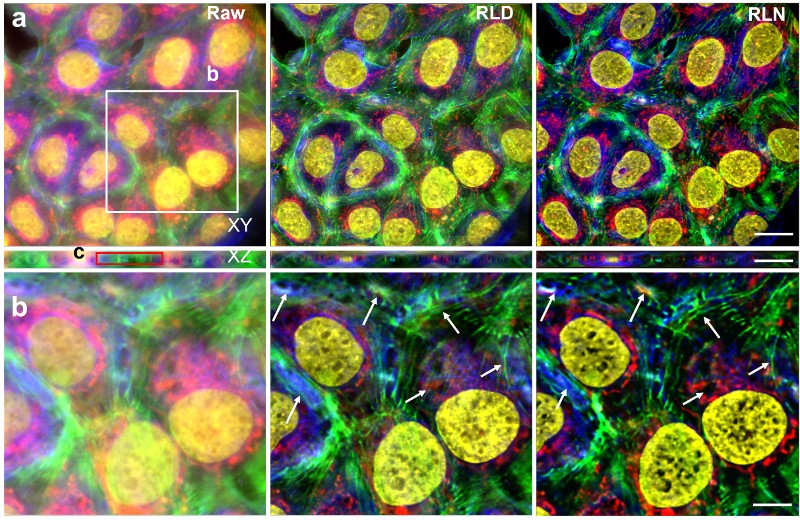

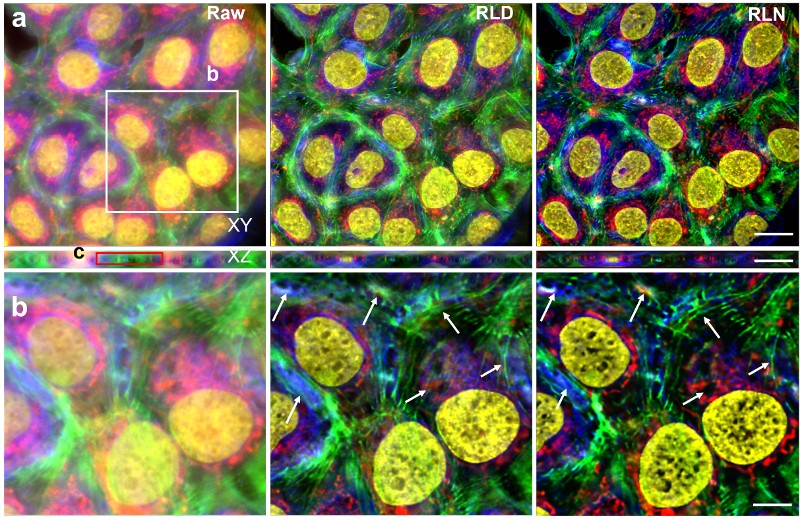

Before the use of neural networks, images were cleaned up using equations, a process known as deconvolution. Richardson-Lucy Deconvolution (RLD) employs an equation that uses knowledge of the blur introduced by the microscope to clarify the image. The image is processed through the equation repeatedly to improve it further. Each pass through the equation is known as an iteration, and many iterations are needed to create a clear picture. The resources and time needed to run an image through many iterations are the main drawback of the RLD approach.
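To make the iterative idea concrete, here is a minimal sketch of the classic Richardson-Lucy update in Python with NumPy. It is an illustration under stated assumptions, not the code used in the study: the blur is modeled as a Gaussian, the image is two-dimensional, convolution wraps around the image edges, and the names `gaussian_psf`, `blur`, and `richardson_lucy` are hypothetical helpers. Each iteration re-blurs the current estimate, compares it with the measured image, and multiplies the estimate by a correction factor.

```python
import numpy as np

def gaussian_psf(shape, sigma):
    """Centered Gaussian blur kernel (an assumed stand-in for a measured microscope blur)."""
    yy, xx = np.indices(shape)
    cy, cx = shape[0] // 2, shape[1] // 2
    psf = np.exp(-((yy - cy) ** 2 + (xx - cx) ** 2) / (2 * sigma ** 2))
    return psf / psf.sum()

def blur(image, otf):
    """Circular convolution of the image with the blur, done in Fourier space."""
    return np.fft.irfft2(np.fft.rfft2(image) * otf, s=image.shape)

def richardson_lucy(measured, psf, iterations=50, eps=1e-12):
    """Classic Richardson-Lucy deconvolution; psf must have the same shape as measured."""
    otf = np.fft.rfft2(np.fft.ifftshift(psf / psf.sum()))
    # Start from a flat image that carries the same average brightness.
    estimate = np.full(measured.shape, measured.mean(), dtype=float)
    for _ in range(iterations):
        reblurred = blur(estimate, otf)
        ratio = measured / np.maximum(reblurred, eps)
        # Multiplying by the conjugate transfer function applies the flipped blur.
        estimate *= blur(ratio, np.conj(otf))
    return estimate

# Toy example: two bright points on a dim background, blurred and then restored.
truth = np.full((32, 32), 0.1)
truth[8, 8] += 100.0
truth[20, 24] += 100.0
psf = gaussian_psf(truth.shape, sigma=2.0)
measured = blur(truth, np.fft.rfft2(np.fft.ifftshift(psf)))
restored = richardson_lucy(measured, psf, iterations=50)
```

In this toy case the restored points become progressively sharper with more iterations, which also shows why the method is slow: every iteration costs two full convolutions of the image.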

RLD is considered to be physics-driven because it describes the physical properties that cause blurring and noise in an image. Neural networks are said to be data-driven because they must look at many images (data) to know what constitutes a clear or blurry image. The NIBIB team sought to exploit the advantages (and mitigate the disadvantages) of each method by combining them. The result is a neural network that also uses RLD: a Richardson-Lucy network (RLN).

By design, a neural network detects features in matching pairs that will help it learn how to clear up a blurry image. Interestingly, the scientists who designed these networks are generally unaware of the specific features the network uses to achieve this feat. What is known is that the features detected by the network are based, at least in part, on physical properties of the microscope and can therefore be represented by equations.

The team developed a training regimen that incorporates RLD-like equations into the neural network. These equations add information about the physical properties that distort the image. The most useful equations are recycled through the network, speeding up the learning process. Building these iterative RLD equations into the neural network is what creates RLN.

“We consider this approach to ‘guide’ the learning process of the neural network,” explains Yicong Wu, Ph.D., lead author of the study. “Simply put, the guide helps the network learn more quickly.”

Tests using images of worm embryos showed that RLN improved both training and processing time compared with other deep learning programs currently in use. The number of parameters required to train the network using RLN was drastically reduced, from several million to fewer than 20,000. The processing time to obtain clear images of embryos was also greatly reduced: RLN took only a few seconds to resolve an image, compared with between 20 seconds and several minutes for other popular neural networks.

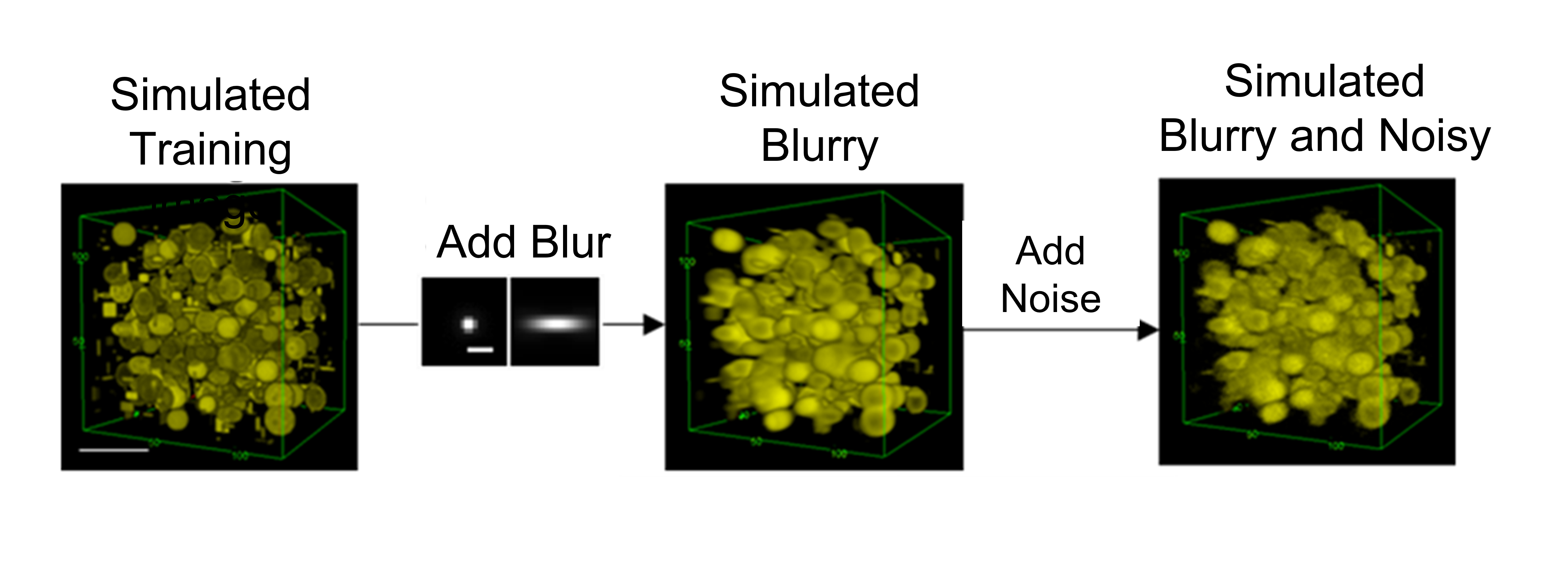

Although RLN speeds up the training process, the clear and blurred image data sets required to train the network are difficult to obtain or create from scratch. To address the problem, the researchers ran the RLD equations in reverse to quickly create synthetic data sets for training. The computer-generated images were built from a mixture of points, circles and spheres, called mixed synthetic data. Blur was added to the synthetic images based on measurements of out-of-focus cells. Background noise was also added, producing blurry and noisy versions of the computer-generated shapes. These pairs of clear and blurry synthetic images were used to train a neural network to restore real images of live cells. The experiment demonstrated that RLN trained on synthetic data outperformed RLD in creating clear images of out-of-focus cells. Surprisingly, RLN clarified many fine structures in the images that RLD could not detect.
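The recipe for synthetic training pairs can be sketched in a few lines of Python with NumPy. This is a simplified illustration, not the team's pipeline, and it rests on several assumptions: the images are two-dimensional, so filled discs stand in for spheres; the blur is a Gaussian rather than one measured from out-of-focus cells; the noise is modeled as Poisson counts plus Gaussian read noise; and `synthetic_pair` and all of its parameters are hypothetical names.

```python
import numpy as np

def synthetic_pair(size=64, n_shapes=12, sigma=2.0, background=5.0,
                   read_noise=1.0, seed=0):
    """Return (clean, noisy): a sharp synthetic image and its blurred, noisy twin."""
    rng = np.random.default_rng(seed)
    yy, xx = np.indices((size, size))
    clean = np.zeros((size, size))

    # Draw a random mixture of points, circles (rings), and filled discs.
    for _ in range(n_shapes):
        cy, cx = rng.uniform(8, size - 8, size=2)
        radius = rng.uniform(2, 6)
        dist = np.hypot(yy - cy, xx - cx)
        kind = rng.choice(["point", "circle", "disc"])
        if kind == "point":
            clean[int(round(cy)), int(round(cx))] += 200.0
        elif kind == "circle":
            clean[np.abs(dist - radius) < 0.7] += 50.0
        else:
            clean[dist <= radius] += 30.0

    # Forward model: blur the sharp image with an assumed Gaussian kernel.
    psf = np.exp(-((yy - size // 2) ** 2 + (xx - size // 2) ** 2) / (2 * sigma ** 2))
    psf /= psf.sum()
    otf = np.fft.rfft2(np.fft.ifftshift(psf))
    blurred = np.fft.irfft2(np.fft.rfft2(clean) * otf, s=clean.shape)
    blurred = np.clip(blurred, 0, None)  # remove tiny negative rounding errors

    # Add a constant background, photon (Poisson) noise, and Gaussian read noise.
    noisy = rng.poisson(blurred + background) + rng.normal(0, read_noise, clean.shape)
    return clean, noisy

clean, noisy = synthetic_pair(seed=0)
```

Calling the function with different seeds yields as many training pairs as needed, which is the appeal of the approach: the sharp version of each image is known exactly because it was drawn first and degraded afterward.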

“The success we are having with using synthetic data to train neural networks is very exciting,” explained Hari Shroff, Ph.D., one of the study’s lead authors. “Creating or obtaining data sets for training has been a huge bottleneck in image processing. This combination of the findings of this work – that synthetic data really works, especially when used with RLN – has the potential to usher in a new era in image processing that we are vigorously pursuing.”

The group is very excited about another aspect of the work. They found that synthetic data sets created to restore images of a specific subject, such as living cells, could also be used to restore blurred images of completely different subjects, such as human brains. They describe this as the “generalization” of synthetic training. The team is now moving full speed ahead to see how far that generalization can be taken to accelerate the creation of high-quality images for biological research.

The work was supported by NIH internal laboratories, the National Institute of Biomedical Imaging and Bioengineering, and the National Institute of Mental Health with additional funding from the NIH Office of the Director, the National Institute of General Medical Sciences, and the National Institute of Neurological Disorders and Stroke. Additional support was provided by the National Key Research and Development Program of China, the National Natural Science Foundation of China, the Talent Program of Zhejiang Province, Woods Hole Marine Biological Laboratories, and HHMI. This work used the computational resources of the NIH HPC Biowulf cluster (https://hpc.nih.gov).

-Written by Thomas Johnson, Ph.D.